Robots in kitchens are no longer science fiction. From Shanghai to New York, they can be found flipping burgers, making dosas, or preparing stir-fries. But these robots can only follow pre-programmed instructions to the letter, much as robots have for the past 50 years.

Enter Ishika Singh, a Ph.D. student in computer science who envisions a robot that can truly make dinner: one that navigates a kitchen, finds the ingredients, and turns them into a tasty dish. A child can manage this. For a robot, though, it is a complex feat that requires knowledge of the specific kitchen, adaptability, and resourcefulness, qualities beyond the reach of current robot programming.

The limitation lies in the "classical planning pipeline" that roboticists have traditionally used. In this approach, engineers define every action, its preconditions, and its effects in detail, essentially writing a rigid script for the robot to follow. No matter how much effort goes into it, the script cannot account for situations the programmers did not foresee.
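To make the classical approach described above concrete, here is a minimal sketch, assuming a toy STRIPS-style representation in which each action lists its preconditions (facts that must hold beforehand), the facts it makes true, and the facts it makes false. The action names and facts (`open_fridge`, `egg_in_fridge`, and so on) are hypothetical, and the planner is a plain breadth-first search rather than any particular robotics system. It finds a plan when the kitchen matches what the hand-written model anticipates, and finds nothing when it does not.

```python
from collections import deque
from typing import FrozenSet, Iterable, List, NamedTuple, Optional, Set


class Action(NamedTuple):
    name: str
    preconditions: FrozenSet[str]  # facts that must hold before acting
    add_effects: FrozenSet[str]    # facts made true by acting
    del_effects: FrozenSet[str]    # facts made false by acting


def act(name: str, pre: Iterable[str], add: Iterable[str], delete: Iterable[str] = ()) -> Action:
    return Action(name, frozenset(pre), frozenset(add), frozenset(delete))


def apply_action(action: Action, state: FrozenSet[str]) -> FrozenSet[str]:
    return (state - action.del_effects) | action.add_effects


def plan(start: Set[str], goal: FrozenSet[str], actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search for a sequence of actions that reaches the goal."""
    initial = frozenset(start)
    frontier = deque([(initial, [])])
    seen = {initial}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for action in actions:
            if action.preconditions <= state:
                nxt = apply_action(action, state)
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [action.name]))
    return None  # the hand-written model offers no way to reach the goal


# Hypothetical hand-written action model for frying an egg.
ACTIONS = [
    act("open_fridge", {"fridge_closed"}, {"fridge_open"}, {"fridge_closed"}),
    act("take_egg", {"fridge_open", "egg_in_fridge"}, {"holding_egg"}, {"egg_in_fridge"}),
    act("crack_egg_into_pan", {"holding_egg", "pan_on_stove"}, {"egg_in_pan"}, {"holding_egg"}),
    act("cook_egg", {"egg_in_pan", "stove_on"}, {"fried_egg"}, {"egg_in_pan"}),
]

kitchen = {"fridge_closed", "egg_in_fridge", "pan_on_stove", "stove_on"}
print(plan(kitchen, frozenset({"fried_egg"}), ACTIONS))
# ['open_fridge', 'take_egg', 'crack_egg_into_pan', 'cook_egg']

# An unforeseen situation: someone left the egg on the counter.
surprise = {"fridge_closed", "egg_on_counter", "pan_on_stove", "stove_on"}
print(plan(surprise, frozenset({"fried_egg"}), ACTIONS))
# None
```

The second call shows the brittleness in miniature: a person would simply grab the egg from the counter, but because no one wrote an action for that case, the planner has nothing to offer.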

The dinner-making robot needs a more dynamic approach. It needs to be aware of the specific cuisine it's cooking (what constitutes "spicy" in this culture?), the layout of the kitchen (is there a hidden rice cooker?), and the preferences of the people it's serving (extra hungry from a workout?), all while adapting to surprises like dropped ingredients.

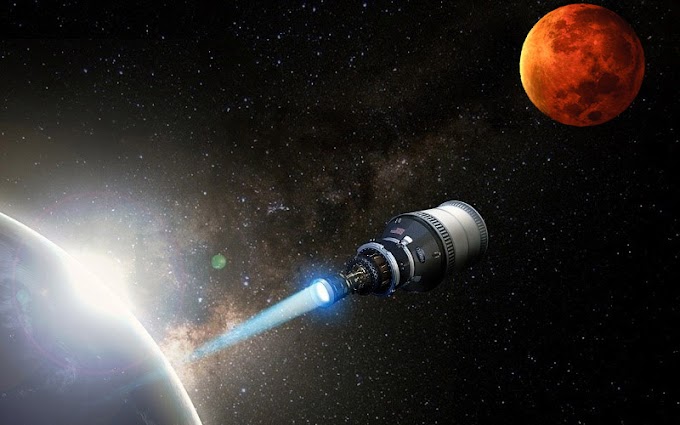

Jesse Thomason, Singh's Ph.D. supervisor, calls this goal a "moonshot": a seemingly impossible but highly desirable achievement. Robots that could automate any human chore would transform industries and simplify daily life.

Yet despite impressive advances in robots for warehouses, healthcare, and even entertainment, none comes close to human flexibility and adaptability. "Classical robotics is brittle," explains Naganand Murty, CEO of a company that makes landscaping robots. Constant changes in weather, terrain, and owners' preferences demand that robots work beyond pre-programmed scripts, something they still struggle to do.

For decades, robots lacked the "canny, practical brain" needed for real-world tasks. That began to change in late 2022 with the release of ChatGPT, a user-friendly interface to a "large language model" (LLM) from OpenAI's GPT-3.5 series. LLMs, trained on massive amounts of text and code, can generate fluent, humanlike text, answering questions and carrying on conversations.

LLMs supply the knowledge that robots lack. Having been trained on vast amounts of text about food, kitchens, and recipes, they can offer a plausible answer to almost any question a robot might have about turning ingredients into a meal.

This combination of an LLM's knowledge and a robot's physical capabilities holds immense potential. As one 2022 paper puts it, "the robot can act as the language model's 'hands and eyes,' while the language model supplies high-level semantic knowledge about the task."
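One way to picture this division of labor is the minimal sketch below, assuming a scheme in which the language model rates how useful each of the robot's skills would be for a request, the robot rates how likely each skill is to succeed given what its sensors currently report, and the robot picks the skill with the best combined score. Both scoring functions are hypothetical stand-ins with invented numbers; a real system would query an actual language model and learned perception models, and this is an illustration of the general idea, not the method of the paper quoted above.

```python
from typing import Dict, List

SKILLS: List[str] = ["find a sponge", "pick up the sponge", "go to the table"]


def usefulness(instruction: str) -> Dict[str, float]:
    """Hypothetical stand-in for a language model rating how helpful each
    skill is for the instruction. The numbers are invented for illustration."""
    if "spill" in instruction:
        return {"find a sponge": 0.3, "pick up the sponge": 0.5, "go to the table": 0.2}
    return {skill: 1.0 / len(SKILLS) for skill in SKILLS}


def feasibility(sponge_visible: bool) -> Dict[str, float]:
    """Hypothetical stand-in for the robot's perception: how likely each
    skill is to succeed from the robot's current situation."""
    return {
        "find a sponge": 0.9,
        "pick up the sponge": 0.9 if sponge_visible else 0.05,
        "go to the table": 0.8,
    }


def choose_skill(instruction: str, sponge_visible: bool) -> str:
    """Pick the skill that is both useful (language model) and doable (robot)."""
    useful = usefulness(instruction)
    doable = feasibility(sponge_visible)
    return max(SKILLS, key=lambda skill: useful[skill] * doable[skill])


request = "I spilled my drink, can you help?"
print(choose_skill(request, sponge_visible=False))  # find a sponge
print(choose_skill(request, sponge_visible=True))   # pick up the sponge
```

On its own, the language model would jump straight to picking up the sponge; multiplying by the robot's feasibility estimate steers it to find the sponge first when none is in view.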

While many people use LLMs for entertainment or help with homework, some roboticists see them as the key to freeing robots from the limits of pre-programming. That prospect has sparked a race across industry and academia to find the best ways to integrate LLMs with robots.

Others, however, are skeptical, pointing to LLMs' biases, factual errors, and privacy risks. They argue that the models' tendency to "hallucinate," confidently stating things that are false, along with their susceptibility to manipulation, makes them unsuitable for integration with robots.

Despite these concerns, companies such as Levatas are already building LLMs into their robots. The company's industrial robot dog, equipped with ChatGPT, can understand spoken instructions, answer questions, and carry out commands, so operators no longer need specialized training.

Levatas's robot marks real progress, but it operates only within specific, controlled settings. It could not play fetch or work out how to use unfamiliar ingredients.

A key constraint on robots is how little they can perceive and do. They gather information through sensors such as cameras and microphones and act through a narrow repertoire of movements with arms, wheels, or grippers, which keeps them from interacting with their surroundings as effectively as humans do.

LLMs are themselves a form of machine learning, but most machine-learning models are far narrower. Rather than working with language, they learn patterns in task-specific data, which lets them excel at particular jobs such as predicting how proteins fold or screening job candidates.

LLMs stand out for their versatility. They can converse and generate text about almost anything, unlike models confined to a single task. Still, their responses are predictions of likely text based on their training data, not evidence of true understanding.

The sheer scale of LLMs, in both their training data and their size, is key to their effectiveness. OpenAI's GPT-4, for example, is estimated to have more than a trillion parameters, the adjustable numerical values the model tunes during training as it learns. This vast amount
